Skills

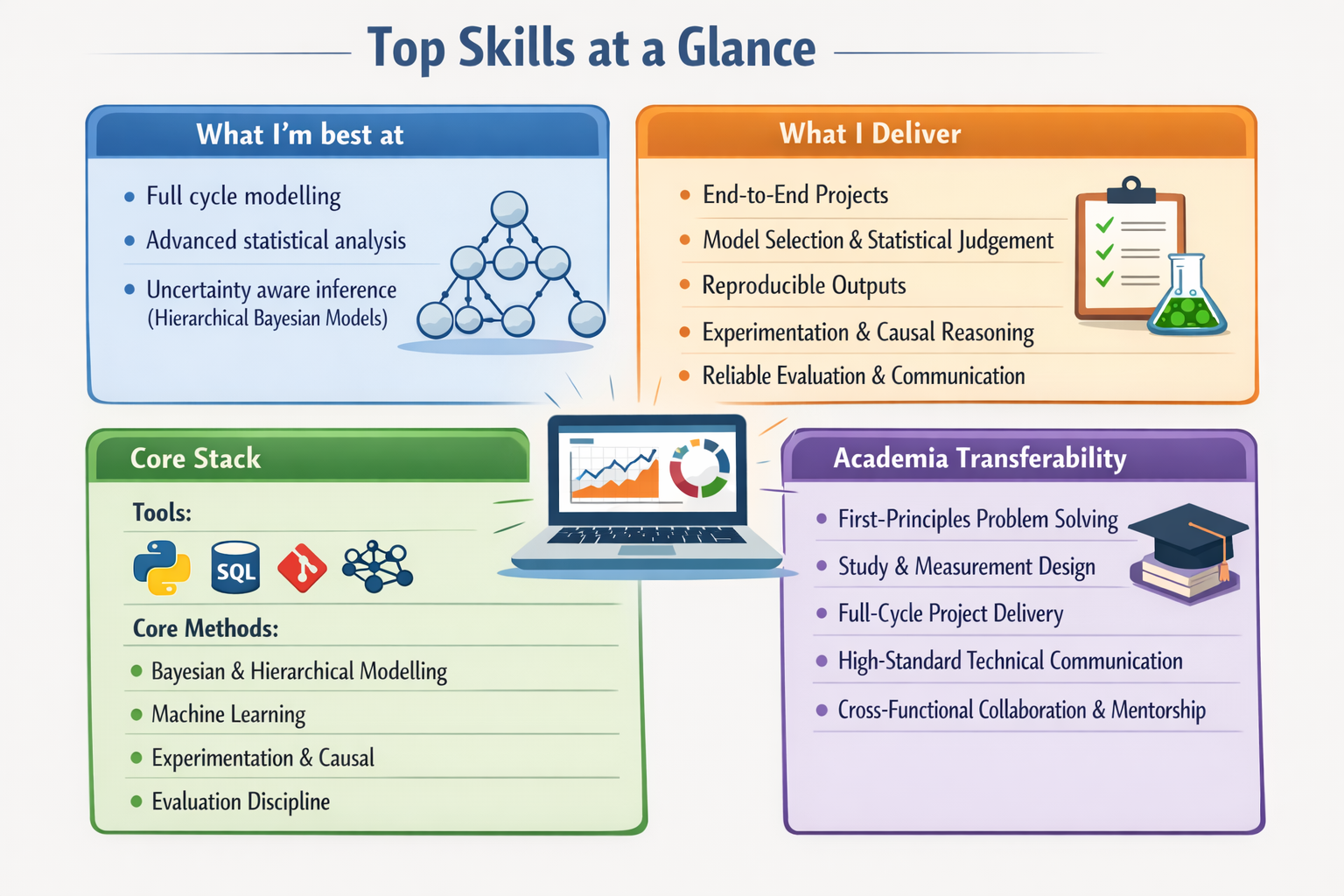

What I’m best at

I deliver end-to-end modelling: I define the decision and metrics, build the data foundation, develop features and models, validate rigorously, and ship reproducible outputs. My main strength is statistical judgement and uncertainty-aware inference (especially hierarchical/Bayesian), which matters most when data are noisy, sparse, biased, or structured in space or time.

What I deliver

- Full-cycle modelling delivery: define objectives and success metrics, design the data foundation, engineer features, train models, validate rigorously, and deliver reproducible outputs that support decisions.

- Model selection with statistical judgement: select model families aligned to the data-generating process, including structured dependence across space and time.

- Bayesian hierarchical inference: partial pooling and principled uncertainty propagation for robust estimates and uncertainty-aware decision-making when data are sparse, noisy, or biased.

- Experimentation and causal reasoning: A/B testing fundamentals, power and effect-size framing, and clear treatment of confounding, selection bias, and the limits of identification.

- Reliable evaluation and communication: leakage checks, calibration awareness, error slicing, robustness and stress testing, and transparent reporting of assumptions, tradeoffs, and limitations.

Core stack

Tools

- Python: pandas, NumPy, scikit-learn; pipelines; visualisation (matplotlib/seaborn/plotly); Keras, TensorFlow

- SQL (PostgreSQL): joins, CTEs, window functions; analytics transformations

- Software engineering: Git/GitHub; modular code; testing/validation; reproducible environments (conda/venv)

- LLM engineering: structured prompting, tool calling, RAG foundations, agent orchestration (LangGraph; Python), API integration

Core methods

- Bayesian & hierarchical modelling: partial pooling, uncertainty quantification and propagation

- Machine learning: supervised/unsupervised, model selection, tuning, gradient boosting (incl. XGBoost)

- Experimentation & causal: A/B testing, power/effect size, confounding, selection bias

- Evaluation discipline: leakage, calibration, error analysis, robustness

Transferable skills from academia

- First-principles problem solving: break down open-ended questions, formalise hypotheses, and select methods aligned to the data-generating process.

- Study and measurement design: design sampling and measurement protocols, define data quality standards, and handle bias, missingness, and uncertainty at the source.

- Full-cycle project delivery: scope work, set milestones, manage tradeoffs, and deliver high-quality outcomes under real constraints.

- High-standard technical communication: publish peer-reviewed work and produce clear, auditable narratives with explicit assumptions, evidence, and limitations.

- Cross-functional collaboration and mentorship: work across disciplines, mentor and upskill collaborators, and drive alignment through proposal-style writing and resourcing justification (grants/funding).

Technical depth

Bayesian, hierarchical & spatiotemporal modelling

- Generalised linear models (GLMs) and generalised additive models (GAMs) for nonlinear effects

- Threshold and segmented regression for decision-point inference

- Hierarchical and mixed-effects modelling; partial pooling

- Bayesian inference with uncertainty quantification and propagation; priors as explicit assumptions

- Spatiotemporal modelling: structured dependence, forecasting logic, species distribution models (SDMs)

- Observation vs process modelling: detection–abundance separation; N-mixture models

- Integrated population models (IPMs)

LLMs, prompt engineering & agents

- Prompting for structured outputs; reliability patterns (prompt scaffolds, self-checks, evaluation loops)

- Tool calling and retrieval patterns; schema/contract design for model outputs

- Agent workflows: planning/acting loops, orchestration, retries, human-in-the-loop checkpoints

- LangGraph concepts: state, control flow, tracing/debugging

- OpenAI API integration patterns for prompt-driven applications

Machine learning (classical)

- Supervised learning: linear/logistic regression, tree-based models, random forests, gradient boosting (incl. XGBoost), SVM, k-NN

- Unsupervised learning: PCA, clustering (K-Means), anomaly detection

- scikit-learn Pipelines; hyperparameter tuning; model comparison and baselines

Deep learning

- Neural networks for classification and regression

- Training fundamentals: loss functions, optimisers, regularisation, monitoring and early stopping

- TensorFlow / Keras: model definition, training, evaluation

Evaluation, interpretability & reporting

- Validation design: train/val/test, cross-validation, temporal/blocked splits where appropriate

- Evaluation discipline: leakage checks, calibration awareness, error analysis and slicing, robustness/stress testing

- Interpretability: feature importance, partial dependence, SHAP-style global/local explanations

- Decision-ready reporting: assumptions, limitations, tradeoffs, and clear recommendations

Experimentation & causal inference

- A/B testing fundamentals: hypotheses, metrics, power and effect size

- Causal inference basics: confounding, selection bias, counterfactual framing, limits of identification

- Practical decision-making under uncertainty: interpreting results and communicating tradeoffs

Data engineering & integration

- Data ingestion and transformation: structured files, schema discipline, reliable I/O

- SQL-centric data work: joins across complex relational datasets, analytics transformations

- API integration patterns: extracting, normalising, and joining external data sources

Software engineering & reproducibility

- Git/GitHub workflows: branching, pull requests, code review, merge discipline

- Maintainable codebases: modular architecture, clean interfaces, reusable components, pipeline-style structure

- Quality controls: input validation, assertions, unit tests (pytest patterns), docstrings, type hints where useful

- Reproducibility: environment management (conda/venv), deterministic runs, versioned artefacts, methods-first documentation

NLP, recommenders & text features

- Text preprocessing and inspection: normalisation, tokenisation, feature auditing

- Vectorisation: bag-of-words and TF-IDF; baseline classifiers (Naive Bayes)

- Recommender foundations: similarity metrics, collaborative filtering, constraint-aware framing

Cloud (AWS)

- Foundational understanding of AWS cloud concepts: shared responsibility, high availability, scalability/elasticity, and core tradeoffs

- Core AWS services: EC2, Lambda, S3, EBS, EFS, RDS, DynamoDB

- Networking & access fundamentals (high level): VPC, subnets, security groups, NACLs, Internet Gateway, NAT Gateway

- Observability & governance (high level): CloudWatch, CloudTrail, AWS Organizations, Cost Explorer, Budgets

Geospatial & remote sensing

- Raster/vector workflows; spatial joins; geoprocessing pipelines

- Spatial feature engineering; landscape/canopy metrics

- Scalable spatial processing

Visualisation & lightweight apps

- Visualisation: matplotlib, seaborn, plotly (Python); ggplot2 (R)

- Lightweight apps: Streamlit (Python), Shiny (R)

Education & Training

A detailed, certificate-linked list of formal education and courses is maintained under Education & Training.